DeepSeek AI Development: $1.6 Billion Spent, Debunking Affordability Myth

DeepSeek's chatbot, which introduced itself with the intriguing line "Hi, I was created so you can ask anything and get an answer that might even surprise you," has quickly emerged as a formidable competitor in the AI market. Its impact was so significant that it led to one of NVIDIA's largest stock price drops. This achievement is rooted in DeepSeek's innovative approach to AI model architecture and training methods.

DeepSeek's model stands out for several architectural techniques. The first is Multi-token Prediction (MTP), in which the model predicts several upcoming tokens at once rather than only the next one. This improves both accuracy and efficiency. Another key feature is the Mixture of Experts (MoE) architecture, which employs 256 expert neural networks and activates only eight of them for each token, so only a fraction of the model does work on any given token. This accelerates training and boosts performance. Lastly, Multi-head Latent Attention (MLA) focuses on the most important parts of a sentence, repeatedly extracting key details so that important nuances in the input are captured.
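
The "eight of 256" routing idea can be illustrated with a minimal sketch. This is generic top-k expert routing, not DeepSeek's actual router: the toy experts, the random router scores, and the function names `route_token` and `moe_output` are all hypothetical and chosen only for illustration.

```python
import math
import random

NUM_EXPERTS = 256  # experts available, per the article
TOP_K = 8          # experts activated per token, per the article


def route_token(router_logits, top_k=TOP_K):
    """Pick the top_k highest-scoring experts and normalize their weights."""
    # Indices of the top_k largest router scores.
    chosen = sorted(
        range(len(router_logits)),
        key=lambda i: router_logits[i],
        reverse=True,
    )[:top_k]
    # Softmax over the selected scores only (subtract the max for stability).
    peak = max(router_logits[i] for i in chosen)
    exps = [math.exp(router_logits[i] - peak) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]


def moe_output(token_value, experts, routing):
    """Weighted sum of the outputs of only the selected experts."""
    return sum(weight * experts[i](token_value) for i, weight in routing)


rng = random.Random(0)
# Hypothetical toy experts: each one just scales its input by a fixed factor.
experts = [lambda x, s=rng.uniform(0.5, 1.5): s * x for _ in range(NUM_EXPERTS)]
# Hypothetical router scores for one token; a real router would compute
# these from the token's hidden state.
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

routing = route_token(logits)
print(len(routing), "experts active out of", NUM_EXPERTS)
print("combined output:", moe_output(2.0, experts, routing))
```

Only the eight selected experts are ever called for the token, which is where the efficiency gain described above comes from: the other 248 contribute no compute.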

Image: ensigame.com

DeepSeek, a prominent Chinese startup, claims to have developed this competitive AI model at minimal cost. The company states that it spent only $6 million on training DeepSeek V3, using just 2,048 graphics processors. However, analysts from SemiAnalysis report that DeepSeek operates a vast computational infrastructure of around 50,000 NVIDIA Hopper GPUs, including 10,000 H800 units, 10,000 H100s, and additional H20 GPUs. These resources are spread across multiple data centers and are used for AI training, research, and financial modeling.

The company's total investment in servers is approximately $1.6 billion, with operational expenses estimated at $944 million. DeepSeek is a subsidiary of the Chinese hedge fund High-Flyer, which spun off the startup in 2023 to focus on AI technologies. Unlike most startups, DeepSeek owns its data centers, allowing full control over AI model optimization and faster innovation implementation. The company remains self-funded, enhancing its flexibility and decision-making speed.
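
To put the reported figures side by side, here is a rough back-of-the-envelope sketch using only numbers quoted in this article. The comparison is an assumption of this example rather than part of the SemiAnalysis estimate: a training-run cost and a capital investment in servers measure different things, so the ratios only indicate scale.

```python
# Figures as reported in the article (USD and GPU counts).
claimed_training_cost = 6_000_000     # claimed cost of training DeepSeek V3
server_investment = 1_600_000_000     # estimated total investment in servers
training_gpus = 2_048                 # GPUs the company says it used
estimated_fleet_gpus = 50_000         # approximate Hopper GPUs per SemiAnalysis


def share(part, whole):
    """Return part as a percentage of whole."""
    return 100.0 * part / whole


cost_share = share(claimed_training_cost, server_investment)
gpu_share = share(training_gpus, estimated_fleet_gpus)

print(f"Claimed training cost vs. server investment: {cost_share:.3f}%")
print(f"Training GPUs vs. estimated fleet: {gpu_share:.3f}%")
```

On these numbers, the claimed training cost is well under 1% of the estimated server investment, and the GPUs cited for the training run are roughly 4% of the estimated fleet.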

Moreover, DeepSeek attracts top talent from leading Chinese universities, with some researchers earning over $1.3 million annually. The company's claimed $6 million training cost covers only GPU usage during pre-training; it excludes research expenses, model refinement, data processing, and infrastructure costs. Since its inception, DeepSeek has invested over $500 million in AI development. Its compact structure lets it put AI innovations into practice quickly and effectively.

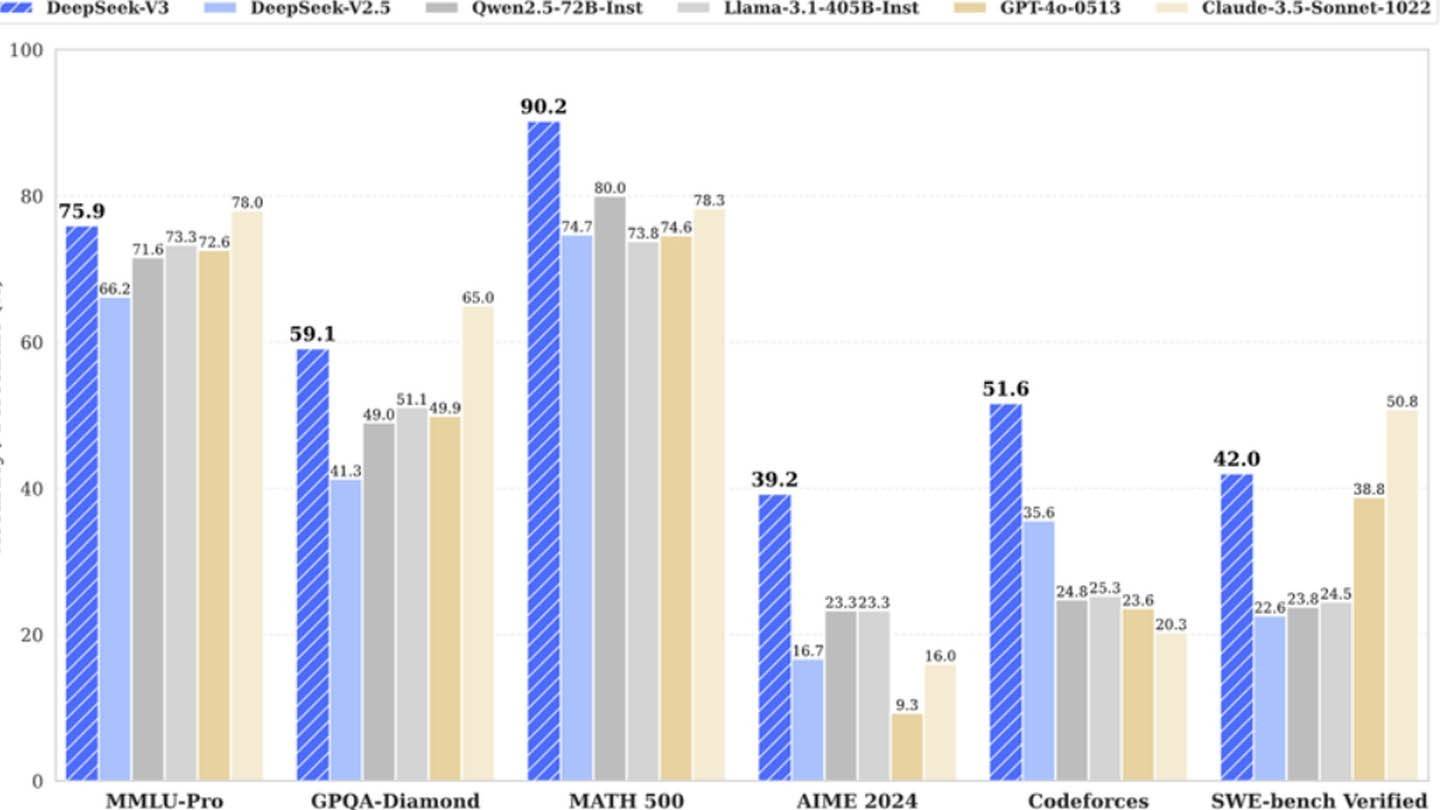

DeepSeek's example demonstrates that a well-funded, independent AI company can compete with industry leaders. However, experts note that the company's success stems from significant investment, technical breakthroughs, and a strong team, rather than from a "revolutionary budget" for developing AI models. Even so, DeepSeek's costs remain lower than those of its competitors: DeepSeek spent $5 million on R1, for instance, while GPT-4o cost $100 million to train.
